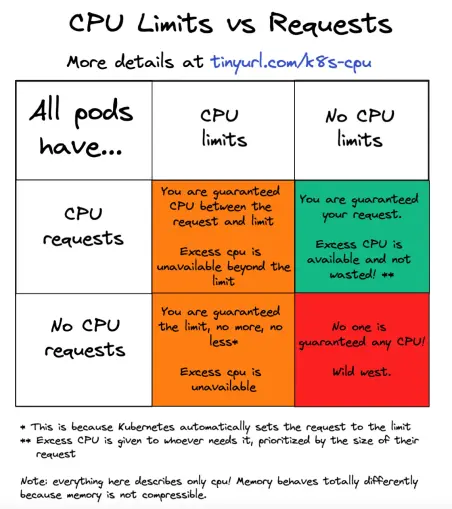

It's so counterintuitive, but cpu limits on #kubernetes: don't use them!

I'm fighting with this right now at my day job. And again, it's due to misalignment over resource accounting.

@zeab limits have such a niche use case that setting them is one of the very few ways you can shoot yourself in the foot in kube. We went through this at my day job quite a while ago, addressing cpu throttling.
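For anyone wondering where the throttling comes from, here is a rough sketch of the arithmetic. It assumes the default CFS period of 100 ms and a simplified model where every thread is CPU-bound and runs on its own core; the function names are made up for illustration and are not part of any Kubernetes API.

```python
CFS_PERIOD_US = 100_000  # assumed default CFS period (100 ms)


def cfs_quota_us(limit_millicpu: int, period_us: int = CFS_PERIOD_US) -> int:
    """CPU time in microseconds a container may consume per period."""
    return limit_millicpu * period_us // 1000


def throttled_us_per_period(limit_millicpu: int, busy_threads: int,
                            period_us: int = CFS_PERIOD_US) -> int:
    """Wall-clock time per period spent throttled (simplified model:
    all threads are CPU-bound and run in parallel on separate cores)."""
    quota = cfs_quota_us(limit_millicpu, period_us)
    # N parallel threads drain the shared quota N times faster.
    runnable_us = min(period_us, quota // busy_threads)
    return period_us - runnable_us


# A 1-CPU limit with 4 busy threads: quota is gone after 25 ms,
# and the container sits throttled for the remaining 75 ms.
print(throttled_us_per_period(1000, 4))  # 75000
```

Under these assumptions the container is stalled for three quarters of every period even if the node has idle cores, which is the kind of latency hit people usually mean by "cpu throttling".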

Requests are really the best way to go, especially if you use any sort of autoscaling. Even then you can right-size your workload requests to fit onto your nodes.
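A small sketch of why requests alone tend to behave well: cpu requests become relative cgroup weights, so they only matter when the node is contended. The model below is deliberately simplified (every container wants more cpu than it can get), and the pod names and function are hypothetical.

```python
def share_under_contention(requests_millicpu: dict[str, int],
                           node_millicpu: int) -> dict[str, float]:
    """Split a fully contended node's cpu in proportion to requests.

    Simplified model: every container is trying to use more cpu than
    is available, so the scheduler weights decide the split.
    """
    total = sum(requests_millicpu.values())
    return {name: node_millicpu * req / total
            for name, req in requests_millicpu.items()}


# Hypothetical pods on a 4-core node, requesting 500m and 1500m.
print(share_under_contention({"api": 500, "worker": 1500}, 4000))
# {'api': 1000.0, 'worker': 3000.0}
```

In this model each pod gets at least what it requested and the spare capacity is handed out proportionally instead of sitting idle behind a limit.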

@elebertus that's been my sentiment as well. Outside of #kubernetes, other job orchestrators handle cpu limits the way you would expect. But on k8s? It really likes to make you work.

For cpu limits in k8s to work, it has to be some short-lived batch job with an exact resource definition for running that same type of job. Everything else, nope.

@elebertus @zeab it is niche, but in a multitenant environment I’ve seen it used on workloads with a history of runaway errors to limit billing. That kind of bug should never make it to production, so this would be a guard rail in a non-production environment.

@Basil404 @elebertus though knowing when you have such a problem is just as important. And you generally wouldn't know about cpu exhaustion right from the start, especially if the limit is set prematurely.

I think on k8s, cpu limits tend to do more harm than good. It's not the same as with plain #cgroupV2, where limits act as a resource allocation but allow workloads to share the allocation until congestion hits.