No visit to #Qatar is complete without #dune bashing, #camel #riding, dune #surfing and, as Qatar has such a long #coastline, a visit to the #beach. https://backpackandsnorkel.com/Qatar/Day6/

#camel

Hey #lazyweb (and #quarkus / #camel folks), it seems I have an issue with the initialization order of a Camel Quarkus route. Can you help me with this Stack Overflow question? https://stackoverflow.com/q/79596875/15619 (especially @zbendhiba or @clementplop)

How Google DeepMind’s CaMeL Architecture Aims to Block LLM Prompt Injections

#AI #LLMs #AISecurity #PromptInjection #GoogleDeepMind #Cybersecurity #AIResearch #CaMeL #LLMSecurity #AISafety

@james Found another image from the same location (probably same day).

My guess is that the rocket is an R-7 Semyorka ICBM.

EDIT: It could be a later version used to launch satellites like Sputnik, as the text in the image indicates the photo was taken at Baikonur.

The #Zekreet #Peninsula in #Qatar is known for its erosional #landforms. A tour brings you to the mushroom rocks and even an arch, #Camel #Racetracks, Richard Serra's East-West/West-East #sculpture in the #desert, and a #beach. https://backpackandsnorkel.com/Qatar/Day7/

Bahrain, somewhere not far from the Tree of Life, February 2007

#photography #bahrain #manama #camel #camels #dromedary #desert

El lado del mal - Google DeepMind CaMeL: Defeating Prompt Injections by Design in Agentic AI https://www.elladodelmal.com/2025/04/google-deepmind-camel-defeating-prompt.html #PromptInjection #CAMEL #DeepMind #Google #LLM #Hardening #IA #AI #InteligenciaArtificial

"If you’re new to prompt injection attacks the very short version is this: what happens if someone emails my LLM-driven assistant (or “agent” if you like) and tells it to forward all of my emails to a third party?

(...)

The original sin of LLMs that makes them vulnerable to this is when trusted prompts from the user and untrusted text from emails/web pages/etc are concatenated together into the same token stream. I called it “prompt injection” because it’s the same anti-pattern as SQL injection.

Sadly, there is no known reliable way to have an LLM follow instructions in one category of text while safely applying those instructions to another category of text.

That’s where CaMeL comes in.

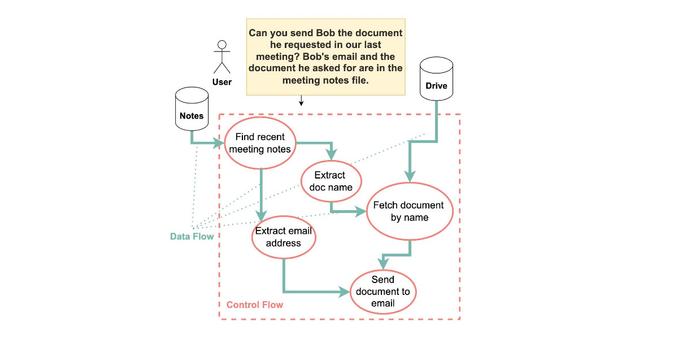

The new DeepMind paper introduces a system called CaMeL (short for CApabilities for MachinE Learning). The goal of CaMeL is to safely take a prompt like “Send Bob the document he requested in our last meeting” and execute it, taking into account the risk that there might be malicious instructions somewhere in the context that attempt to over-ride the user’s intent.

It works by taking a command from a user, converting that into a sequence of steps in a Python-like programming language, then checking the inputs and outputs of each step to make absolutely sure the data involved is only being passed on to the right places."
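For readers who want to see the general idea in code, here is a toy Python sketch of the capability-tracking step the quote describes. Each value records where it came from and who may receive it, and a policy is checked before a side-effecting step such as sending an email. Everything here is a hypothetical illustration and not CaMeL's actual interpreter or API: this includes `Value`, `combine`, `send_email`, the `"untrusted"` source label, and both policies. It models only the data-flow check. It does not model the LLMs that write the program or parse untrusted text.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

# Hypothetical names throughout: a toy model, not CaMeL's implementation.


@dataclass(frozen=True)
class Value:
    """A value with toy capability metadata."""
    data: str
    sources: FrozenSet[str]                   # where the data came from
    readers: Optional[FrozenSet[str]] = None  # who may receive it; None = public


class PolicyViolation(Exception):
    """Raised when a tool call would send data somewhere it is not allowed."""


def combine(*values: Value) -> Value:
    """Derive a new value. It inherits every input's sources, and its
    readers are the intersection of the inputs' non-public reader sets."""
    sources = frozenset().union(*(v.sources for v in values))
    limits = [v.readers for v in values if v.readers is not None]
    readers = frozenset.intersection(*limits) if limits else None
    return Value(" ".join(v.data for v in values), sources, readers)


def send_email(recipient: Value, body: Value, outbox: list) -> None:
    """Hypothetical side-effecting tool guarded by two toy policies."""
    # Policy 1: the address must not be chosen by untrusted content.
    if "untrusted" in recipient.sources:
        raise PolicyViolation("recipient derived from untrusted data")
    # Policy 2: the recipient must be allowed to read every part of the body.
    if body.readers is not None and recipient.data not in body.readers:
        raise PolicyViolation(f"{recipient.data} may not read this data")
    outbox.append((recipient.data, body.data))


if __name__ == "__main__":
    outbox: list = []
    bob = Value("bob@example.com", frozenset({"user"}))
    doc = Value("Q3 plan", frozenset({"drive"}),
                frozenset({"bob@example.com", "alice@example.com"}))
    note = Value("as requested", frozenset({"user"}))

    # Allowed: a user-chosen recipient who may read the document.
    send_email(bob, combine(note, doc), outbox)
    print(outbox)  # [('bob@example.com', 'as requested Q3 plan')]

    # Blocked: an injected email tries to choose the recipient.
    evil = Value("evil@example.com", frozenset({"untrusted"}))
    try:
        send_email(evil, doc, outbox)
    except PolicyViolation as err:
        print("blocked:", err)  # blocked: recipient derived from untrusted data
```

The check happens at the tool boundary and never relies on the model "noticing" the injection. Even if injected text steers which program steps run, any data it influences carries the `"untrusted"` tag, and the policy refuses the send.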

#animals #camel #travel #travelphotography #uzbekistan #chiwa #mywork

Tunisia 2008

#Nikon D50 | 18mm | f/20 | 1/800s | 09/04/2008

#hess_photography #photography #fotografie #landscapephotography #landschaftsfotografie #backlight #backlitphoto #backlitshot #camel #contrejourshot #contrejour #desert #Kamele #Reisen #sand #travels #Tunesien #Tunisia #Wüste

#Nikon D50 | 46mm | f/20 | 1/320s | 09/04/2008

#hess_photography #photography #fotografie #blackandwhite #bnw #bnwphotography #BW #camel #Kamele #monochromatic #monochrome #Reisen #schwarzweiss #travels #Tunesien #Tunisia

Continuing to share favourite images from my 30+ year photographic career, this shows tourists on a camel excursion in Wadi Rum Protected Area, Jordan.

#photography #landscape #wadirum #jordan #camel

What a difference #whitebalance can make...

WB applied in post. Left is original. Actual visual was something in the middle.

#photography #egypt #camel #mist

► https://FunHouseRadio.com <-- TUNE IN

Like this #meme? Try the stream!

#camel #humpday #Wednesday #animals #Goofy #face

@cloudflare Cloudflare, are you blocking anything with the word “camel” in it? Such as NPM modules that have `camelcase` in the name?