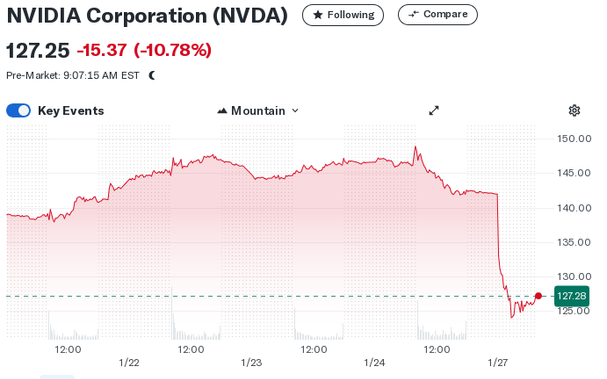

I had not realized just how bad the news of the DeepSeek chatbot had been for Nvidia's stock price. I suppose that placing all your bets on a bullshit technology that nobody wants has its downsides.

@gabrielesvelto In this case, this dip is because people are worried that a competitor will reduce NVIDIA's profits, no? Not about the fundamental technology?

@bhearsum I haven't read too much on the topic, but it seems that the DeepSeek LLMs are roughly as good (or as bad) as the big bad OpenAI LLMs but need a fraction of the computational power... making Nvidia's future prospects look bad.

@gabrielesvelto aaaaah, my bad, I clearly didn't read too much into it...

@gabrielesvelto @bhearsum I'm confused. I read that training that model cost just a few million, but then I also read that this company has a stock of Nvidia A100 chips worth hundreds of millions (assuming 15k/chip).

https://www.technologyreview.com/2025/01/24/1110526/china-deepseek-top-ai-despite-sanctions/

@gabrielesvelto @bhearsum they use chips from 2023, before export restrictions, and get seemingly better results

@gabrielesvelto @bhearsum and AMD wasting no time to capitalize on Nvidia's misfortune, gotta love it https://mastodon.social/@chromamagic.com@bsky.brid.gy/113901158019698233

@bhearsum @gabrielesvelto It makes no sense. New models will not reduce the demand for GPUs; the demand for GPUs will steadily rise in the coming years. It's just financial nonsense.

@jaj @bhearsum there is no real demand. The entire AI bubble has been fueled by venture capital. OpenAI, which is the biggest fish in the market, had $3.7 billion in revenue in 2024. That's peanuts. Twilio, a company whose business is sending SMS, made more than that. There's no money in AI and thus there will be no demand once the VC funds dry up. And God knows what will happen to the existing datacenters once they become useless.

@gabrielesvelto @bhearsum The big tech giants were ahead in the first round. Now smaller companies in every sector are following. I work in healthcare and we are currently buying GPUs. Next will be consumer devices, because you want to run your LLM on your phone even when you have no network coverage. More efficient models will only enable more complicated models, not reduce demand. I think people can no longer live without LLMs.

@jaj @bhearsum if that were true people would be paying for them, but nobody is. The "artificial intelligence" market is minuscule, and companies are trying to sleazily bundle LLMs into other products to force people to pay for them even if they don't want to, like what Microsoft did with Copilot. A successful product doesn't need those tricks to sell.

@gabrielesvelto @bhearsum Maybe it depends on your background. I certainly see a lot of people around me who pay for premium OpenAI accounts, especially in academia.

@jaj @gabrielesvelto lots of companies are paying too

@bhearsum @jaj that money amounts to peanuts, especially in light of the amount of money that has already been sunk into developing this technology. Ed Zitron wrote a painfully detailed breakdown of OpenAI and Microsoft's AI revenues, and the conclusion is that the money just isn't there: https://www.wheresyoured.at/oai-business/

@gabrielesvelto @bhearsum Maybe you are right. In my domain of healthcare research, we went from linear models to decision trees and now to deep neural networks trained on huge amounts of data to build predictive models, and you need a lot of GPUs for this. I expect other industries see the same evolution. On top of that, no vendor other than NVIDIA has been able to get a foothold in the GPGPU business, so I think their sales will do fine for years to come.

@bhearsum @gabrielesvelto It's that the competitor (also garbage but that doesn't matter) doesn't need obscene numbers of GPUs and coal power plants to get the same (garbage) results.